An increasing number of live events such as conferences, meetings, lectures, debates, radio and TV shows, etc. are nowadays being live streamed on video channels and social networks. These events are transmitted in real time to a large audience, on all types of devices and anywhere in the world. Captioning and live translation [1] are seen as essential in order to ensure that these events reach a growing international audience.

Optimising comfort and comprehension for such a large audience raises the issue of multilingualism, which we discuss in this post.

In the context of the upcoming French Presidency of the European Union in January 2022, SYSTRAN has developed a tool called Speech Translator for real-time captioning and translation of single-speaker speeches or multi-speaker meetings. Starting with French or English as the source spoken language, Speech Translator:

- transcribes the original speech, partnering for this task with Vocapia Automatic Speech Recognition [2],

- punctuates and segments the automatic speech recognition (ASR) output, making this automatically formatted and corrected transcription available to human reviewers and to the audience (speech transcription/captioning),

- simultaneously runs machine translation (MT) powered by our best quality translation models towards European Union languages (speech translation/subtitling),

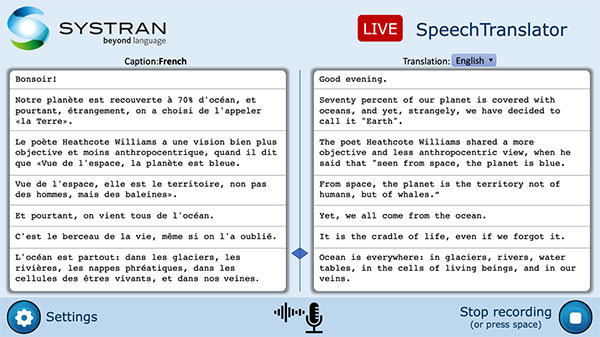

all of this with the lowest latency and in a dedicated and user-friendly interface. The task closely resembles simultaneous interpreting, which performs real-time multilingual translations. The next figure shows a screenshot of our live ST system interface where captions (left) as well as the corresponding English translations (right) are displayed.

SpeechTranslator: Live speech translation system.

Let’s focus on the setup for live translation of a French speech into English, to make it accessible to a wider audience.

Given the French speech signal, the system first produces ASR transcriptions, which are then segmented, corrected and formatted as French captions and translated into English. The figure below illustrates our ST pipeline. Recent systems perform speech translation following a direct approach, where a single network is in charge of translating the input speech signal into target-language text. However, despite the architectural simplicity and minimal error propagation of such new systems, cascaded solutions are still widely used, mainly because direct approaches suffer from data scarcity: most language pairs lack parallel resources (speech signal/text translations). Moreover, industry applications usually display speech transcripts alongside translations (as in our previous figure), making cascaded approaches more realistic and practical.

Within the standard cascaded framework, researchers have encountered several difficulties, including:

- Adaptation to ASR transcripts: ASR hypotheses exhibit very different features from those of the texts used to train neural machine translation (NMT) networks. While NMT models are often trained with clean and well-structured text, spoken utterances contain multiple disfluencies and recognition errors which are not well modelled by NMT systems. In addition, ASR systems do not usually predict sentence boundaries or capital letters correctly, as they are not reliably accessible as acoustic cues. Typical ASR systems segment the input speech using only acoustic information, i.e., pauses in speaking, which greatly differ from the units expected by conventional MT systems.

- Low latency translations: Systems that use longer speech segments may span multiple sentences, causing significant translation delays that harm the reading experience. Limited translation delays are typically achieved by starting translation before the entire audio input is received, a practice that introduces important processing challenges.

In the following we give further details on the challenges encountered in the implementation of our ST system and outline the actions taken to address such issues.

Adaptation to ASR transcripts

A vast number of audio sources are nowadays being produced on a daily basis. ASR systems enable such speech content to be used in multiple applications (e.g. indexing, cataloguing, subtitling, translation, multimedia content production). Details depend on the individual ASR system, but their output, commonly called a transcript, typically consists of plain text enriched with time codes. The figure below illustrates an example of an ASR transcript.

Notice time codes and confidence scores for each record. Latency records indicate pauses in speech. Next, we enumerate a few of the most challenging features present in speeches considered in our project (French political speeches). The next picture illustrates two transcripts containing typical errors and disfluencies.

| en complément des tests ça l’ hiver la grande nouveauté de la reprise olivier veran on y reviendra sera le déploiement des auto-tests |

| depuis nous avons euh chercher une solution qui puisse être accepté par les groupes politique à propos de la 3ème partie de cet amendement |

- Sentence boundaries. Speech units contained in transcripts do not always correspond to sentences as they are established in written text, while sentence boundaries provide a basis for further processing of natural language.

- Punctuation. Partially due to the absence of sentence boundaries, ASR systems in real-time mode produce no punctuation marks, although punctuation is key to the legibility of speech transcriptions.

- Capitalisation. Transcriptions do not include correct capitalisation. A true-casing task is needed to assign each word its corresponding case information, usually depending on context. This is the case of the first words in our examples.

- Number representation. Numbers provide a challenge for transcription, in particular number segmentation. See for instance the example of our previous figure where the uttered number ‘2001’ is wrongly transcribed as a sequence of three numbers: 2, 1000 and 1. Both transcriptions may be possible; only the use of context can help pick the right one.

- Disfluencies. Speech disfluencies such as hesitations, filled pauses, lengthened syllables, within-phrase silent pauses and repetitions are among the most frequent markers of spontaneity. Disfluencies are the most important source of discrepancies between spontaneous speech and text. A hesitation (euh) is transcribed in our previous example.

- Recognition errors. ASR systems are error-prone. Multiple misrecognition types exist. For this work, we mainly consider errors due to homophones, missed utterances, wrongly inserted words and inflection changes.

- Homophone: (ça l’ hiver → salivaires)

- Wrongly inserted word: (on)

- Inflection changes: (chercher → cherché; accepté → acceptée; politique → politiques)

Adaptation is achieved by enriching speech transcripts and MT data sets so that they more closely resemble each other, thereby improving the system's robustness to error propagation and making the results more legible for humans. We took French/English bitexts in the political discourse domain and randomly injected noise into the French side, simulating errors generated by an ASR system, to obtain parallel noisy French/clean French/clean English texts that we used to train our models.
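The noise injection described above can be sketched roughly as follows. This is a minimal illustration, not our production code: the confusion table, the filler list, the function names (`inject_noise`, `make_triplet`) and the probabilities are all hypothetical, and a real system would derive its substitutions from observed ASR errors.

```python
import random
import re

# Hypothetical confusion table (clean form -> ASR-like variants),
# built here from the error examples listed in this post.
CONFUSIONS = {
    "salivaires": ["ça l' hiver"],   # homophone
    "cherché": ["chercher"],         # inflection change
    "acceptée": ["accepté"],         # inflection change
    "politiques": ["politique"],     # inflection change
}
FILLERS = ["euh"]  # hesitation inserted as a disfluency


def inject_noise(sentence, rng, p_sub=0.3, p_ins=0.05, p_del=0.05):
    """Return an ASR-like noisy version of a clean French sentence."""
    # ASR output carries no punctuation or casing: strip both first.
    text = re.sub(r'[.,;:!?«»"()]', " ", sentence.lower())
    noisy = []
    for word in text.split():
        if rng.random() < p_del:
            continue  # simulate a missed utterance
        if word in CONFUSIONS and rng.random() < p_sub:
            word = rng.choice(CONFUSIONS[word])  # homophone or inflection error
        noisy.append(word)
        if rng.random() < p_ins:
            noisy.append(rng.choice(FILLERS))  # simulate a wrongly inserted word
    return " ".join(noisy)


def make_triplet(clean_fr, clean_en, rng, **kwargs):
    """Build one (noisy French, clean French, clean English) example."""
    return inject_noise(clean_fr, rng, **kwargs), clean_fr, clean_en
```

Passing a seeded `random.Random` instance keeps the generated corpus reproducible. The same clean pair can be sampled several times to obtain different noisy variants.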

Low latency translations

While current MT systems provide reasonable translation quality, users of live ST systems have to wait for the translation to be delivered. This greatly reduces the system’s usefulness in practice. Limited translation delays are typically achieved by starting translation before the entire audio input is received, a practice that introduces important processing challenges.

We tackle this problem by decoding the ASR output whenever new words become available. The next figure illustrates the inference steps performed by our networks when decoding the ASR transcript of the French utterance: “le palais est vide (pause) le roi est parti il revient demain”.

Column FR indicates (in red) words output by the ASR at each time step t. Columns fr and en indicate the corresponding output of our models (respectively FR2fr and FR2en) at time step t.

Notice that prefix sequences are removed from the input stream (column FR) once the model predicts an end of sentence (eos) followed by N words (N=2 in our example). This strikes a fair balance between flexibility and stability for segmentation choices: the model can reconsider its initial prediction, while consistent choices are retained. Notice also that after predicting (eos), the words output by our models are the same words output by the ASR. This allows us to identify the prefix to use when building new inputs (underlined strings). The prefix also contains the last token predicted for the previous sentence followed by (eos), which helps predict the case of the initial word of each sentence. Note that the French captions shown in column fr are passed to our fr2en model (after removing the (eos) and any succeeding words) to generate the final English hypotheses.
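The incremental decoding loop described above can be sketched as follows. This is a simplified illustration under several assumptions: the `model(prefix, words)` interface, the `<eos>` token string and the `toy_model` stand-in are hypothetical, and the translation step (fr2en) is left out.

```python
EOS = "<eos>"
SENTENCE_FINAL = {"vide", "parti", "demain"}  # toy boundary cues, for the demo only


def toy_model(prefix, words):
    """Stand-in for the FR2fr network (hypothetical interface).

    It capitalises the first word, emits <eos> after a known sentence-final
    word, and copies every following ASR word unchanged. The prefix is
    ignored here; a real model would use it as casing context.
    """
    out, done = [], False
    for i, word in enumerate(words):
        if done:
            out.append(word)  # after <eos>: ASR words are copied verbatim
            continue
        out.append(word.capitalize() if i == 0 else word)
        if word in SENTENCE_FINAL:
            out.append(EOS)
            done = True
    return out


def stream_decode(asr_words, model, n_lookahead=2):
    """Yield (committed sentences, current hypothesis) after each ASR word."""
    prefix = []     # last committed token + <eos>
    pending = []    # ASR words whose segmentation is still open
    committed = []  # sentences considered final
    for word in asr_words:
        pending.append(word)
        hyp = model(prefix, pending)
        if EOS in hyp:
            cut = hyp.index(EOS)
            tail = hyp[cut + 1:]
            # Commit only once <eos> is followed by N words.
            if cut > 0 and len(tail) >= n_lookahead:
                committed.append(" ".join(hyp[:cut]))
                prefix = [hyp[cut - 1], EOS]
                # The tail mirrors the ASR words, so its length tells
                # how many input words remain uncommitted.
                pending = pending[len(pending) - len(tail):]
                hyp = tail  # shown verbatim until the next decoding step
        yield list(committed), hyp
    if pending:  # end of stream: flush whatever is left
        last = [tok for tok in model(prefix, pending) if tok != EOS]
        committed.append(" ".join(last))
        yield list(committed), []
```

Running `stream_decode` on the words of “le palais est vide le roi est parti il revient demain” commits the first sentence only when “roi” arrives, that is, two words after the predicted boundary.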

We carefully prepared our training datasets using the noise injection and low-latency techniques described above, so that they reproduce the noise present in the data seen at inference.

Results

For evaluation we use test sets from two multilingual ST corpora, Europarl ST [3] and MTEDX [4] (Multilingual TEDx). The next table shows the BLEU results obtained for the English translations with three different pipelines:

- fr2en translates French ASR hypotheses with a model trained from well-structured text (without adaptation)

- FR2fr+fr2en concatenates the cleaning model with a translation model built from well-structured data

- FR2en directly translates French ASR hypotheses into clean English text.

As expected, the fr2en model, trained on clean parallel data, exhibits the worst results. Differences between training and inference data significantly impact performance. Among the models learned using noisy source data, the best BLEU performance is achieved by the FR2en model. We hypothesise that FR2fr+fr2en suffers from error propagation, meaning that errors introduced in the first module (FR2fr) cannot be recovered by the fr2en module. Despite its lower BLEU score, the FR2fr+fr2en model has the benefit of outputting French captions, which is an important asset for some industry applications, and in our present case of live speech captioning and translation.

In terms of latency, the presented framework also delivers translations with very low delay. Each new word supplied by the ASR produces a new captioning and translation hypothesis, which is immediately displayed to the user. Even though the segment being decoded can sometimes fluctuate (the translation changes when additional words are included), in practice we encountered very limited fluctuations, which always affected the last words of the hypotheses being decoded.
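One simple way to quantify such fluctuations is to count, at each update, how many trailing words of the previously displayed hypothesis were rewritten. The sketch below is only an illustration of that idea; it is not the measure used in our evaluation, and the function names are hypothetical.

```python
def common_prefix_len(a, b):
    """Number of leading items shared by two word lists."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def rewritten_words(hypotheses):
    """For each update, count words of the previous hypothesis that changed."""
    counts = []
    for prev, curr in zip(hypotheses, hypotheses[1:]):
        prev_w, curr_w = prev.split(), curr.split()
        # Words beyond the shared prefix were replaced on screen.
        counts.append(len(prev_w) - common_prefix_len(prev_w, curr_w))
    return counts
```

A count of zero means the update only appended words; larger values indicate that more of the displayed text was revised.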

Elise Bertin-Lemée, Guillaume Klein and Josep Crego, SYSTRAN Labs

[1] In this work we use caption to refer to a text written in the same language as the audio and subtitle when translated into another language.

[2] https://www.vocapia.com/voice-to-text.html

[3] https://www.mllp.upv.es/europarl-st/

[4] http://www.openslr.org/100/